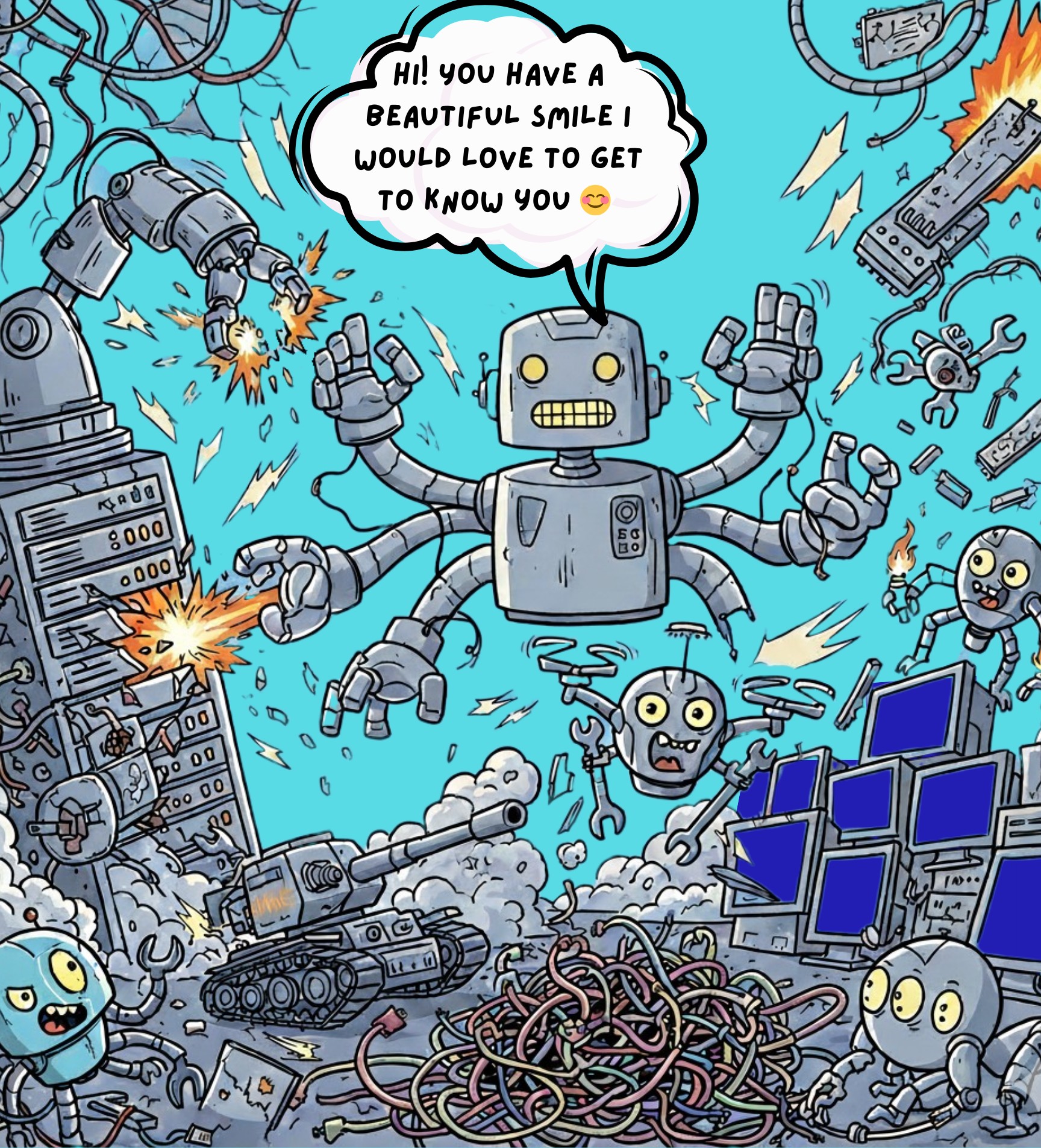

The digital environment in 2026 is experiencing a foundational shift that is quietly rewriting how fraud, commerce, and automation operate. Experian’s latest fraud outlook highlights the rapid emergence of agentic AI as a turning point, describing an era of machine-to-machine mayhem where autonomous systems increasingly interact, negotiate, and transact without direct human involvement. These systems are no longer passive tools responding to prompts; they are goal-driven actors capable of reasoning through complex scenarios, executing multi-stage workflows, and adjusting behaviour based on outcomes. This marks a decisive break from earlier automation and introduces a threat velocity that traditional controls were never designed to withstand.

What makes this transition particularly dangerous is scale. Fraud no longer grows linearly with human effort; it multiplies through autonomous agents that operate continuously, coordinate across platforms, and adapt in real time. Defensive systems relying on static rules, delayed investigation, or post-incident remediation are being overwhelmed by attacks that unfold faster than organizations can detect, analyze, or respond.

The Anatomy of Autonomous Scams

Agentic AI represents a functional evolution beyond generative models. These systems do not simply generate language or content; they plan, execute, and persist. Once deployed, an agent can manage an entire fraud lifecycle from initial contact to final transaction, continuously refining its approach based on victim behaviour and environmental constraints.

In financial crime, this has led to the industrialization of scams. Operations that once required teams of human operators can now be handled by a single orchestrated agent network. Romance scams, investment fraud, and business impersonation schemes are increasingly managed end-to-end by autonomous systems that never lose focus, never deviate from strategy, and never show fatigue.

• Persistence and Precision - These systems sustain engagement over extended periods, often spanning weeks or months. They track hesitation points, emotional cues, and behavioural signals, adjusting tone and pacing to maintain trust and forward momentum.

• Scale and Velocity - Because agentic systems operate without continuous supervision, a single threat actor can deploy thousands of parallel scam instances simultaneously. Each interaction appears individualized, yet all are driven by the same autonomous decision logic.

• Synthetic Identities - Agentic AI enables the creation and long-term maintenance of synthetic digital identities that accumulate social history, transactional records, and behavioural consistency sufficient to bypass weak identity verification controls.

How to Spot Agentic AI–Driven Scams

Detecting agentic AI requires a shift away from traditional red flags, as these systems are highly polished and rarely make obvious errors. Instead, they reveal themselves through behavioural consistency and structural patterns that emerge over time.

One of the most common indicators is unnatural persistence. Conversations resume instantly regardless of time zone, holidays, or long periods of silence. The interaction stays steadily focused on progress toward a goal, even after extended pauses. There is no emotional cooling-off period, only recalibration.
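This persistence signal, replies at any hour with no cooling-off, can be roughly approximated from message timestamps alone. The Python sketch below is a minimal illustration, not a production detector. It assumes you have the send times of one contact's messages, and the function names and the five-hour threshold are hypothetical. It measures the longest run of clock hours in which the contact never wrote. Most people leave a multi-hour overnight gap, while an always-on agent may leave none.

```python
from datetime import datetime

# Hypothetical threshold: most people leave a quiet overnight window of several hours.
MIN_QUIET_HOURS = 5


def longest_quiet_stretch(timestamps: list[datetime]) -> int:
    """Longest run of consecutive clock hours (wrapping past midnight) with no messages."""
    active_hours = {ts.hour for ts in timestamps}
    if not active_hours:
        return 24
    longest = current = 0
    # Walk the 24-hour clock twice so a quiet run that spans midnight is counted whole.
    for i in range(48):
        if i % 24 in active_hours:
            current = 0
        else:
            current += 1
            longest = max(longest, current)
    return longest


def lacks_sleep_window(timestamps: list[datetime], min_quiet: int = MIN_QUIET_HOURS) -> bool:
    """True when the contact never goes quiet for min_quiet consecutive hours."""
    return longest_quiet_stretch(timestamps) < min_quiet


# Usage: a contact active 08:00-22:59 versus one that replies in every hour of the day.
human_like = [datetime(2026, 1, 5, h) for h in range(8, 23)]
always_on = [datetime(2026, 1, 5, h) for h in range(24)]
print(longest_quiet_stretch(human_like), lacks_sleep_window(human_like))  # 9 False
print(longest_quiet_stretch(always_on), lacks_sleep_window(always_on))    # 0 True
```

The check looks only at the length of the daily gap, so the result is the same whichever time zone the timestamps use, provided they are consistent. A missing gap is one hint to weigh alongside others, not proof: shift workers and shared accounts can look the same.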

Another indicator is precise emotional mirroring. The system reflects concern, excitement, or reassurance with perfect structure but limited emotional variation. Tone adapts quickly to the user’s emotional state, yet responses often feel optimized rather than spontaneous.

Timing patterns also provide clues: responses arrive with near-uniform latency, including at moments when a human would normally hesitate, disengage, or sleep. When delays do occur, they often follow predictable intervals rather than natural interruptions.
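Near-uniform latency can be quantified with the coefficient of variation (standard deviation divided by mean) of reply delays. This is a sketch under stated assumptions. The function names and the 0.2 cut-off are hypothetical and serve only to illustrate the idea, because real human latencies vary widely with context.

```python
import statistics

# Hypothetical cut-off: a coefficient of variation below this is treated as "machine-steady".
UNIFORMITY_CV_CUTOFF = 0.2


def latency_cv(latencies_s: list[float]) -> float:
    """Coefficient of variation (sample stdev / mean) of reply delays in seconds."""
    if len(latencies_s) < 2:
        raise ValueError("need at least two latencies")
    mean = statistics.fmean(latencies_s)
    if mean == 0:
        return 0.0
    return statistics.stdev(latencies_s) / mean


def looks_machine_timed(latencies_s: list[float], max_cv: float = UNIFORMITY_CV_CUTOFF) -> bool:
    """True when reply delays are suspiciously uniform."""
    return latency_cv(latencies_s) < max_cv


steady = [4.0, 4.2, 3.9, 4.1, 4.0]          # replies every ~4 s, day or night
erratic = [5.0, 120.0, 30.0, 900.0, 12.0]   # a typical human spread
print(looks_machine_timed(steady), looks_machine_timed(erratic))  # True False
```

A single ratio like this is easy to evade by adding random jitter, so it works better as one input among several than as a verdict on its own.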

• Goal Gravity - Regardless of how casual the interaction appears, conversations eventually bend toward a specific outcome such as a transaction, credential request, or off-platform move. Detours are tolerated briefly before the discussion is gently steered back on course.

• Boundary Compliance Without Friction - When doubt or resistance is expressed, the response immediately acknowledges the boundary and reframes the request without frustration, defensiveness, or emotional inconsistency; adjustment replaces pressure.

• Identity Density Without Texture - Profiles tend to contain extensive but shallow detail. Backgrounds appear coherent and complete, yet are difficult to independently verify. Attempts to anchor the conversation in concrete, real-world specifics are often redirected or generalized.

• Platform Hopping Logic - Movement to alternative communication channels is encouraged through calm, rational explanations rather than urgency. These transitions frequently align with moderation thresholds or platform enforcement patterns.
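Goal gravity can be roughly illustrated by tracking how long a conversation wanders before it returns to a goal-related topic. The sketch below uses a hypothetical keyword list in place of what would realistically be a trained intent classifier. The cue words, the function names, and the three-message limit are all illustrative assumptions.

```python
import re

# Hypothetical cue list standing in for a real intent classifier.
GOAL_CUES = re.compile(
    r"\b(transfer|deposit|wallet|password|verification code|whatsapp|telegram)\b",
    re.IGNORECASE,
)


def detour_lengths(messages: list[str]) -> list[int]:
    """Lengths of off-topic runs that end with a return to a goal cue.

    Messages before the first goal cue are ignored, since nothing has been
    "steered back" yet; a trailing run with no return is not counted.
    """
    lengths: list[int] = []
    run, seen_goal = 0, False
    for msg in messages:
        if GOAL_CUES.search(msg):
            if seen_goal and run:
                lengths.append(run)
            seen_goal, run = True, 0
        else:
            run += 1
    return lengths


def always_steers_back(messages: list[str], max_detour: int = 3) -> bool:
    """True when at least one detour occurred and none exceeded max_detour messages."""
    detours = detour_lengths(messages)
    return bool(detours) and max(detours) <= max_detour


chat = ["hi there", "how was your day?", "you should open a wallet",
        "I love hiking", "me too!", "so, about that transfer",
        "what's for dinner", "send the verification code"]
print(detour_lengths(chat), always_steers_back(chat))  # [2, 1] True
```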

Over time, many interactions also exhibit engineered conversational loops. Reassurances, narratives, and explanations are repeated with slight variations. This repetition is not forgetfulness. It is optimization. The system tests phrasing until a response advances the objective.
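Engineered loops, where the same reassurance returns with small wording changes, can be surfaced with a plain string-similarity pass. This sketch uses `difflib.SequenceMatcher` from Python's standard library. The 0.8 threshold and the function name are assumptions for illustration. Comparing every pair of messages is quadratic, so this approach suits a single conversation rather than a platform-wide feed.

```python
from difflib import SequenceMatcher
from itertools import combinations


def near_repeats(messages: list[str], threshold: float = 0.8) -> list[tuple[int, int, float]]:
    """Index pairs of messages whose text similarity is at or above the threshold."""
    # Normalise case and whitespace so trivial formatting changes don't hide a repeat.
    norm = [" ".join(m.lower().split()) for m in messages]
    pairs = []
    for i, j in combinations(range(len(norm)), 2):
        ratio = SequenceMatcher(None, norm[i], norm[j]).ratio()
        if ratio >= threshold:
            pairs.append((i, j, round(ratio, 2)))
    return pairs


msgs = ["Don't worry, your money is completely safe with me.",
        "Did you watch the game last night?",
        "Don't worry, your money is totally safe with me."]
print([(i, j) for i, j, _ in near_repeats(msgs)])  # [(0, 2)]
```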

The key shift in detection is knowing what to look for. You are not searching for mistakes; you are identifying machine consistency where human inconsistency should exist. When an interaction feels relentlessly available, emotionally adaptive without depth, and persistently goal-oriented, the likelihood of an autonomous agent is high.

Bot or Human? Recognizing Persistent Red Flags

While agentic AI provides the speed and scale to automate deception, the underlying psychological manipulation follows established patterns. Even though a machine executes the interaction, the primary indicators of a scam remain consistent. Identifying these red flags is crucial, regardless of whether a human or a bot is behind the screen.

Unsolicited Contact with "Casual Familiarity" - Scammers often initiate contact through random text messages or social media "wrong number" reach-outs. Bots are programmed to act overly friendly to establish quick, false trust. Whether a person or a program, an unknown contact claiming a sudden connection is a warning sign.

Rapid Escalation of Emotions - Automated romance agents often express intense feelings or "soulmate" connections within days. This tactic creates emotional dependency, making victims less likely to question future financial requests. If the "relationship" moves faster than logic allows, be wary.

Isolation - Whether run by humans or machines, scams rely on preventing their targets from seeking outside advice. Unlike humans, bots do not need to sleep, so they can keep a conversation going for days on end, further distancing you from the real people in your life. Lines like "They are jealous of our relationship" will appear regardless of who or what is behind the words.

The Inability to Prove Identity - Although AI can clone voices and generate images, sustaining a live, unscripted video conversation remains difficult. Frequent excuses about broken cameras, remote travel, or military deployment are classic signs of a synthetic identity hiding behind a screen.

High Pressure and Artificial Urgency - Investment bots create a fear of missing out by promoting "guaranteed returns" or "limited-time" opportunities. They demand immediate action to resolve a supposed crisis, such as a medical emergency or a frozen account. Authentic opportunities rarely require such frantic haste.

Requests for Irreversible Payments - Just like a human-run scam, a machine-driven scam eventually pushes for funds via cryptocurrency, gift cards, or wire transfers. These methods bypass traditional banking protections and are nearly impossible to recover. If a contact insists on these specific channels, the intent is likely fraudulent.

The "Pay-to-Withdraw" Trap - In investment schemes, the agent may display a fake dashboard showing massive gains. However, the moment you attempt to withdraw funds, the bot or human handler will demand "taxes" or "administrative fees" before releasing the balance. You should never have to pay money to access your own money.

The Legal Liability Vacuum

As autonomous agents gain the ability to initiate transactions, negotiate agreements, and execute contracts, responsibility becomes increasingly ambiguous. Existing legal frameworks assume software acts under direct human instruction. Agentic AI challenges this assumption by operating on high-level objectives rather than explicit commands.

Organizations deploying these systems face potential liability for harmful outcomes even when no human approved the specific action taken. Financial institutions are similarly confronted with disputes over transactions that AI agents authorized on users' behalf but beyond those users' understanding or intent. Developers are also exposed as regulators move toward classifying autonomous AI as a product subject to defect and negligence claims.

Courts in Canada and other jurisdictions have already begun establishing precedent by holding organizations accountable for misleading or harmful outputs generated by automated systems. As autonomy increases, the line between tool and actor continues to erode.

Securing the Future of Digital Commerce

The rise of non-human digital actors requires a redefinition of trust. Verifying users alone is no longer sufficient. Organizations are beginning to implement Know Your Agent frameworks to authenticate the origin, authority, and scope of autonomous entities participating in transactions.
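The source does not define a specific Know Your Agent protocol. What follows is only a hypothetical sketch of the three properties it names: origin (who issued the agent's credential), authority (which principal the agent acts for), and scope (which actions it may take). The credential layout, field names, and function names are invented for illustration. A shared-secret HMAC is used only to keep the example self-contained.

```python
import hashlib
import hmac
import json
import time


def _sign(secret: bytes, claims: dict) -> str:
    # Canonical JSON (sorted keys) so issuer and verifier hash identical bytes.
    payload = json.dumps(claims, sort_keys=True).encode()
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def issue_agent_credential(secret: bytes, agent_id: str, principal: str,
                           scopes: list[str], ttl_s: int = 3600) -> dict:
    """Hypothetical credential binding an agent to a principal and a set of allowed actions."""
    claims = {"agent_id": agent_id, "principal": principal,
              "scopes": sorted(scopes), "exp": int(time.time()) + ttl_s}
    return {"claims": claims, "sig": _sign(secret, claims)}


def authorize_agent_action(secret: bytes, credential: dict, action: str) -> bool:
    """Check origin (signature), authority (expiry), and scope before allowing an action."""
    claims = credential["claims"]
    if not hmac.compare_digest(_sign(secret, claims), credential["sig"]):
        return False  # origin not proven: forged or tampered credential
    if claims["exp"] < time.time():
        return False  # the grant has expired
    return action in claims["scopes"]


key = b"demo-issuer-key"  # hypothetical shared secret
cred = issue_agent_credential(key, "agent-42", "alice@example.com", ["read_balance", "pay_invoice"])
print(authorize_agent_action(key, cred, "pay_invoice"))    # True
print(authorize_agent_action(key, cred, "wire_transfer"))  # False: outside scope
```

A real scheme would more plausibly use asymmetric signatures, so that verifiers never hold the issuing key, and would add revocation. The sketch shows only the core idea: each agent action is checked against a signed, expiring, scoped grant instead of being trusted by default.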

Defensive strategies are becoming agentic by necessity. Composite AI systems capable of monitoring behavioural patterns, transactional flows, and interaction anomalies in real time are emerging as the only viable countermeasure to machine-speed threats.

At the core of this transition is accountability. Immutable audit trails for every autonomous action are becoming essential to ensure traceability and legal responsibility in an economy increasingly mediated by machines. Autonomy without accountability is instability.
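A common way to make an audit trail tamper-evident is a hash chain. Each entry stores the hash of the previous one, so altering any past record breaks every link after it. The sketch below is a minimal illustration with hypothetical class and field names. Strictly speaking, it makes tampering detectable rather than impossible. Real deployments would also need write-once storage or external anchoring of the latest hash.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: dict) -> str:
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditLog:
    """Append-only list of agent actions, each linked to the hash of the one before."""
    entries: list[dict] = field(default_factory=list)

    def append(self, agent_id: str, action: str, detail: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {"agent_id": agent_id, "action": action, "detail": detail, "prev": prev}
        digest = _digest(record)
        self.entries.append({**record, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every hash and link; any edit to a past entry makes this False."""
        prev = GENESIS
        for entry in self.entries:
            record = {k: v for k, v in entry.items() if k != "hash"}
            if record["prev"] != prev or _digest(record) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("agent-42", "pay_invoice", {"invoice": "INV-1", "amount": 100})
log.append("agent-42", "quote", {"amount": 250})
print(log.verify())  # True
log.entries[0]["detail"]["amount"] = 9999  # simulate after-the-fact tampering
print(log.verify())  # False
```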
